
Code Implementation of Machine Learning, Deep Learning & Artificial Intelligence Functions

Activation Functions

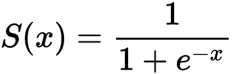

Sigmoid / Logistic Function

import math

def sigmoid(x):
    # Logistic function: maps any real x into (0, 1)
    return 1 / (1 + math.exp(-x))

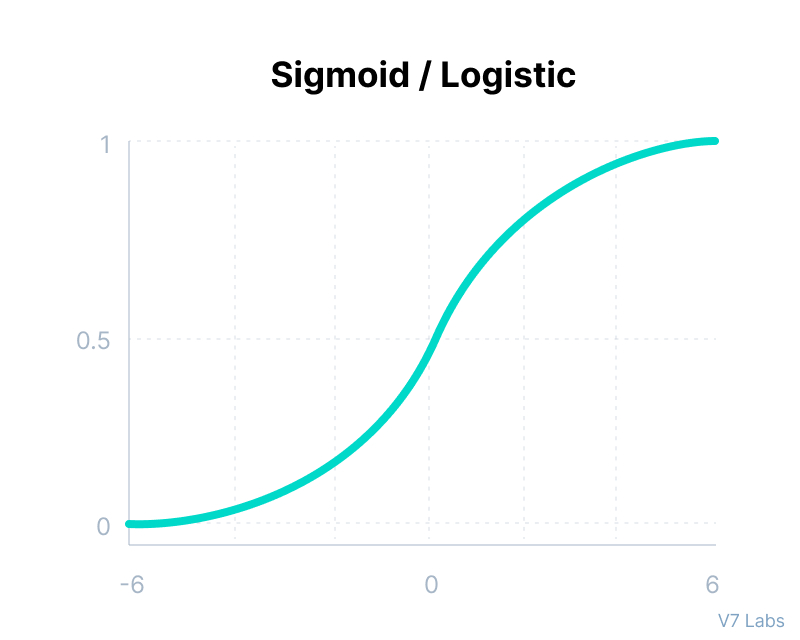

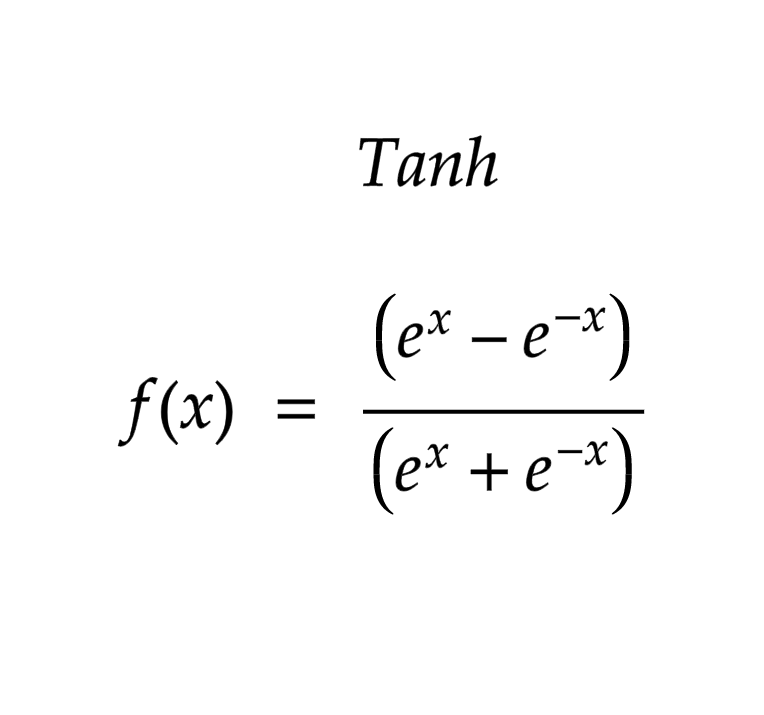

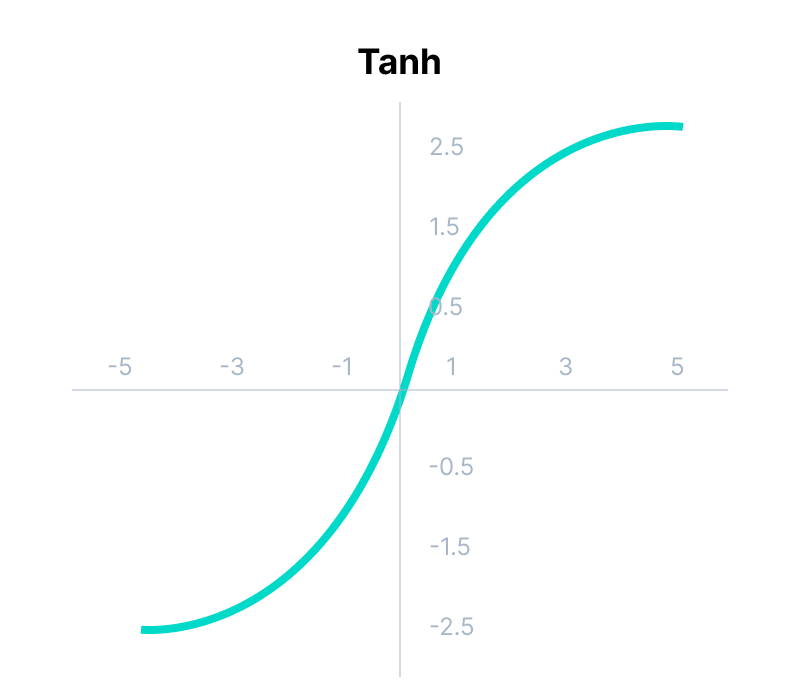

tanh Function

import math

def tanh(x):
    # Hyperbolic tangent: maps any real x into (-1, 1)
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

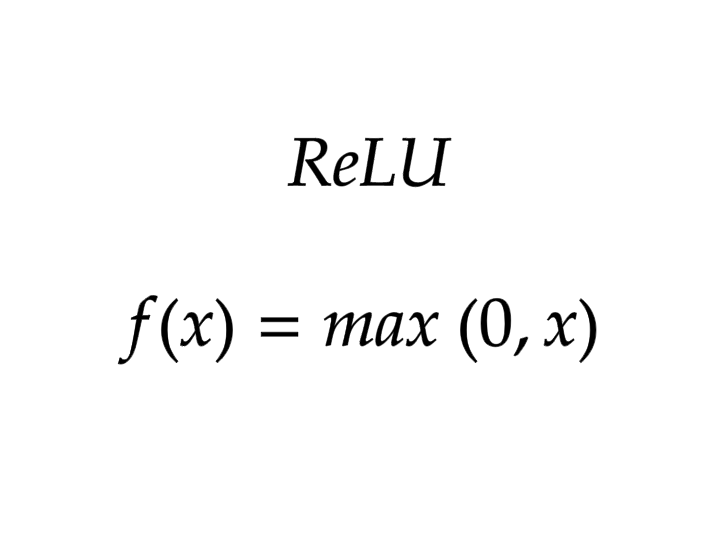

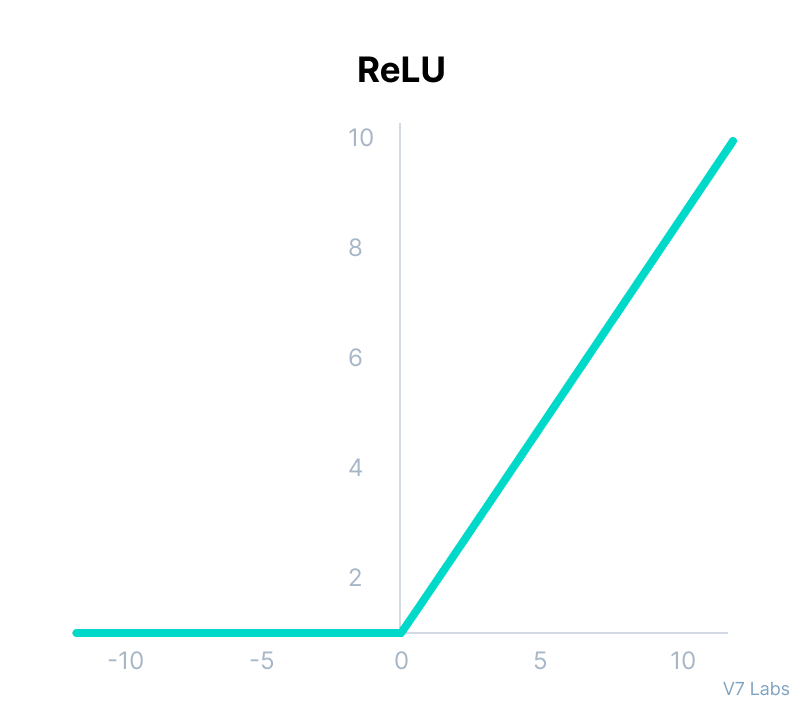

ReLU

def relu(x):
    # Passes positive values through, clamps negatives to 0
    return max(0, x)

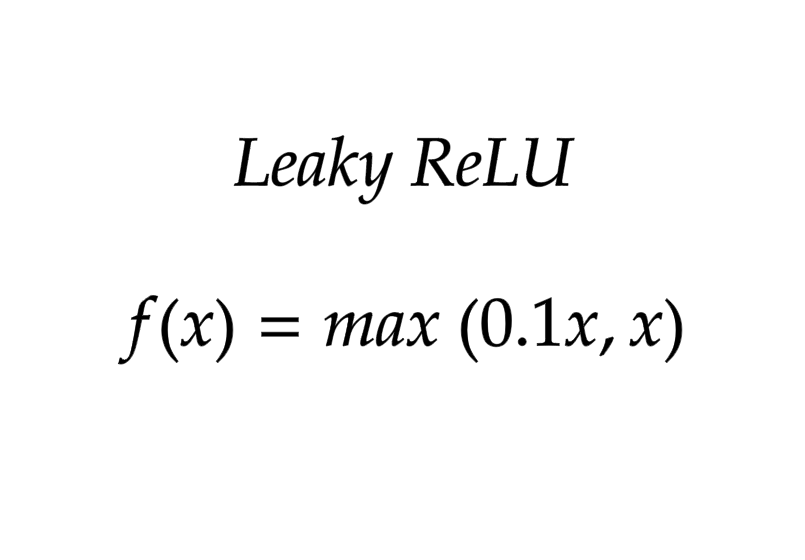

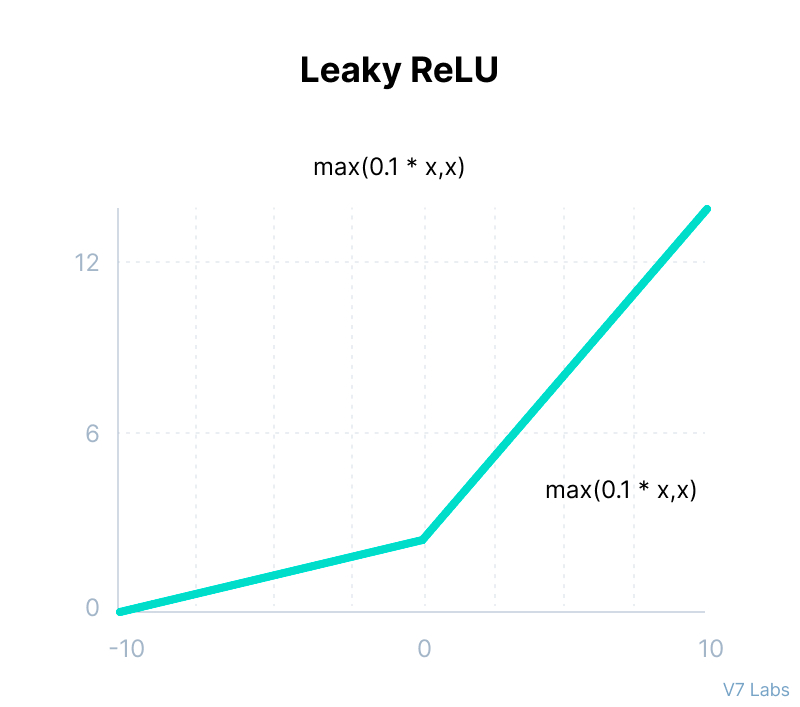

Leaky ReLU

def leaky_relu(x):
    # Like ReLU, but negative inputs keep a small slope (0.1 here)
    return max(0.1 * x, x)
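The scalar functions above take one float at a time. A minimal sketch of vectorized equivalents, assuming NumPy is available; the names `sigmoid_np`, `relu_np`, `leaky_relu_np` and the `alpha` parameter are illustrative and not part of the original notes. The sigmoid is written so that `np.exp` never overflows for large negative inputs.

```python
import numpy as np

def sigmoid_np(x):
    """Element-wise sigmoid that avoids overflow in np.exp."""
    x = np.asarray(x, dtype=float)
    # exp(-|x|) always lies in (0, 1], so it cannot overflow
    z = np.exp(-np.abs(x))
    # x >= 0: 1 / (1 + e^-x);  x < 0: e^x / (1 + e^x)
    return np.where(x >= 0, 1.0 / (1.0 + z), z / (1.0 + z))

def relu_np(x):
    """Element-wise max(0, x)."""
    return np.maximum(0.0, x)

def leaky_relu_np(x, alpha=0.1):
    """Element-wise leaky ReLU; alpha=0.1 matches max(0.1*x, x) above."""
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, alpha * x)

print(sigmoid_np([-2.0, 0.0, 2.0]))
print(leaky_relu_np([-2.0, 3.0]))
```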

Loss / Cost Functions

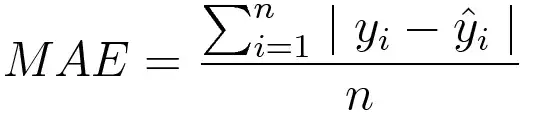

Mean Absolute Error/L1 Loss (Regression Losses)

Mean absolute error (MAE) is the average of the absolute differences between predictions and actual observations. Like MSE (described in the next section), it measures the magnitude of the errors without considering their direction. Unlike MSE, the absolute value is not differentiable at zero, so optimizing MAE can need more complicated tools, such as linear programming. On the other hand, MAE is more robust to outliers because it does not square the errors.
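The robustness claim can be checked numerically. The sketch below uses made-up numbers (not from the original notes) and compares how MAE and MSE react when a single prediction is far off.

```python
import numpy as np

y_true = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y_pred_clean = np.array([1.1, 1.9, 3.2, 3.8, 5.1])
y_pred_outlier = y_pred_clean.copy()
y_pred_outlier[-1] = 15.0  # one prediction is off by 10

def mae(pred, target):
    return np.mean(np.abs(pred - target))

def mse(pred, target):
    return np.mean((pred - target) ** 2)

for label, pred in [("clean", y_pred_clean), ("with outlier", y_pred_outlier)]:
    print(f"{label}: MAE={mae(pred, y_true):.3f}  MSE={mse(pred, y_true):.3f}")
```

The single outlier inflates MSE by a much larger factor than MAE, because its error is squared before averaging.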

# Plain implementation
import numpy as np

y_hat = np.array([0.000, 0.166, 0.333])   # predictions
y_true = np.array([0.000, 0.254, 0.998])  # targets

print("predictions: " + str(["%.8f" % elem for elem in y_hat]))
print("targets: " + str(["%.8f" % elem for elem in y_true]))

def mae(predictions, targets):
    total_error = 0
    for yp, yt in zip(predictions, targets):
        total_error += abs(yp - yt)
    print("Total error is:", total_error)
    # Use a separate name so the result does not shadow the function
    mean_error = total_error / len(predictions)
    print("Mean absolute error is:", mean_error)
    return mean_error

# Usage: mae(y_hat, y_true)

# Implementation using numpy
import numpy as np

y_hat = np.array([0.000, 0.166, 0.333])   # predictions
y_true = np.array([0.000, 0.254, 0.998])  # targets

print("predictions: " + str(["%.8f" % elem for elem in y_hat]))
print("targets: " + str(["%.8f" % elem for elem in y_true]))

def mae_np(predictions, targets):
    return np.mean(np.abs(predictions - targets))

mae_val = mae_np(y_hat, y_true)
print("mae error is: " + str(mae_val))

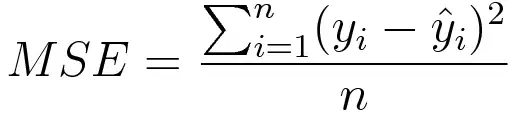

Mean Square Error/Quadratic Loss/L2 Loss (Regression Losses)

Mean square error is measured as the average of the squared difference between predictions and actual observations. It’s only concerned with the average magnitude of error irrespective of their direction. However, due to squaring, predictions that are far away from actual values are penalized heavily in comparison to less deviated predictions. Plus MSE has nice mathematical properties which make it easier to calculate gradients.
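The "nice mathematical properties" come down to a simple gradient: the derivative of the mean squared error with respect to prediction i is 2 * (prediction_i - target_i) / n. A small sketch, with illustrative function names, that checks this formula against central finite differences:

```python
import numpy as np

def mse(pred, target):
    return np.mean((pred - target) ** 2)

def mse_grad(pred, target):
    # d/d pred_i of mean((pred - target)^2) = 2 * (pred_i - target_i) / n
    return 2.0 * (pred - target) / pred.size

pred = np.array([0.000, 0.166, 0.333])
target = np.array([0.000, 0.254, 0.998])

# Central finite differences as an independent check of the formula
eps = 1e-6
basis = np.eye(pred.size)
numeric = np.array([
    (mse(pred + eps * basis[i], target) - mse(pred - eps * basis[i], target)) / (2 * eps)
    for i in range(pred.size)
])

print("analytic:", mse_grad(pred, target))
print("numeric: ", numeric)
```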

# Plain implementation
import numpy as np

y_hat = np.array([0.000, 0.166, 0.333])   # predictions
y_true = np.array([0.000, 0.254, 0.998])  # targets

# Named mse because it returns the mean squared error (no square root is taken)
def mse(predictions, targets):
    total_error = 0
    for yt, yp in zip(targets, predictions):
        total_error += (yt - yp) ** 2
    print("Total Squared Error:", total_error)
    mean_squared = total_error / len(targets)
    print("Mean Squared Error:", mean_squared)
    return mean_squared

print("predictions: " + str(["%.8f" % elem for elem in y_hat]))
print("targets: " + str(["%.8f" % elem for elem in y_true]))

mse_val = mse(y_hat, y_true)
print("mean squared error is: " + str(mse_val))

# Implementation using numpy
import numpy as np

y_hat = np.array([0.000, 0.166, 0.333])   # predictions
y_true = np.array([0.000, 0.254, 0.998])  # targets

def mse_np(predictions, targets):
    return np.mean(np.square(targets - predictions))

print("predictions: " + str(["%.8f" % elem for elem in y_hat]))
print("targets: " + str(["%.8f" % elem for elem in y_true]))

mse_val = mse_np(y_hat, y_true)
print("mean squared error is: " + str(mse_val))

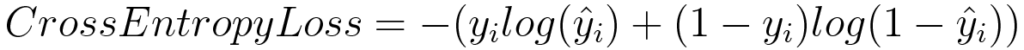

Log Loss or Binary Cross Entropy

import numpy as np

y_predicted = np.array([[0.25, 0.25, 0.25, 0.25], [0.01, 0.01, 0.01, 0.96]])
y_true = np.array([[0, 0, 0, 1], [0, 0, 0, 1]])

def cross_entropy(predictions, targets, epsilon=1e-10):
    # Clip so that log() never sees exactly 0 or 1
    predictions = np.clip(predictions, epsilon, 1. - epsilon)
    N = predictions.shape[0]
    ce_loss = -np.sum(targets * np.log(predictions)) / N
    return ce_loss

cross_entropy_loss = cross_entropy(y_predicted, y_true)
print("Cross entropy loss is: " + str(cross_entropy_loss))

# OR, for binary labels and a 1-D sequence of predicted probabilities
def log_loss(predictions, targets, epsilon=1e-10):
    predicted_new = [max(i, epsilon) for i in predictions]
    predicted_new = [min(i, 1 - epsilon) for i in predicted_new]
    predicted_new = np.array(predicted_new)
    return -np.mean(targets * np.log(predicted_new) + (1 - targets) * np.log(1 - predicted_new))
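A short worked example of binary cross entropy, as a sketch with made-up labels and probabilities. The function name `binary_cross_entropy` is illustrative; it uses `np.clip` instead of the list comprehensions, which is an equivalent way to keep the probabilities away from 0 and 1.

```python
import numpy as np

def binary_cross_entropy(y_true, y_prob, epsilon=1e-15):
    y_true = np.asarray(y_true, dtype=float)
    # Clip so log(0) can never occur
    y_prob = np.clip(np.asarray(y_prob, dtype=float), epsilon, 1 - epsilon)
    return -np.mean(y_true * np.log(y_prob) + (1 - y_true) * np.log(1 - y_prob))

labels = [1, 0, 1, 0]
# Confident and correct predictions give a low loss ...
good = binary_cross_entropy(labels, [0.9, 0.1, 0.8, 0.2])
# ... confident and wrong predictions give a high loss
bad = binary_cross_entropy(labels, [0.1, 0.9, 0.2, 0.8])
print(f"good={good:.4f}  bad={bad:.4f}")
```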

For more loss functions, see ‘Common Loss functions in machine learning’ by Ravindra Parmar.

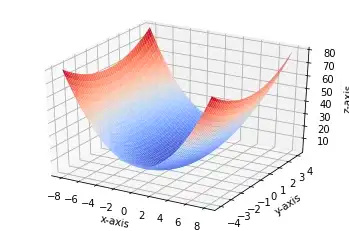

Gradient Descent

Gradient Descent

# Single Feature
import numpy as np
import matplotlib.pyplot as plt

# In a Jupyter notebook, also run the magic: %matplotlib inline

def gradient_descent(x, y, epochs=10000, rate=0.01):
    w1 = bias = 0
    n = len(x)
    plt.scatter(x, y, color='red', marker='+', linewidth=5)
    for i in range(epochs):
        y_predicted = (w1 * x) + bias
        plt.plot(x, y_predicted, color='green')   # fitted line at this epoch
        cost = np.mean((y - y_predicted) ** 2)    # MSE
        md = -(2/n) * sum(x * (y - y_predicted))  # d(cost)/d(w1)
        yd = -(2/n) * sum(y - y_predicted)        # d(cost)/d(bias)
        w1 = w1 - rate * md
        bias = bias - rate * yd
        print("w1 {}, bias {}, cost {} iteration {}".format(w1, bias, cost, i))
    return w1, bias

# Usage
x = np.array([1, 2, 3, 4, 5])
y = np.array([5, 7, 9, 11, 13])
gradient_descent(x, y, 500)
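The usage data lie exactly on the line y = 2x + 3, so gradient descent should move towards a weight of 2 and a bias of 3. A quick sanity check, as a sketch using NumPy's closed-form least-squares fit rather than the notes' own method:

```python
import numpy as np

x = np.array([1, 2, 3, 4, 5])
y = np.array([5, 7, 9, 11, 13])

# Degree-1 least-squares fit; np.polyfit returns [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
print(f"slope={slope:.4f}, intercept={intercept:.4f}")
```

Comparing the final `w1` and `bias` from gradient descent against these two values shows how close the iterative solution is after a given number of epochs.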

---

# Multiple Feature
import numpy as np

def sigmoid_numpy(X):
    return 1 / (1 + np.exp(-X))

# log_loss(predictions, targets) is the function defined in the Log Loss section

def gradient_descent(x1, x2, y, epochs=10000, loss_threshold=0.5, rate=0.5):
    w1 = w2 = bias = 1
    n = len(x1)
    for i in range(epochs):
        weighted_sum = (w1 * x1) + (w2 * x2) + bias
        y_predicted = sigmoid_numpy(weighted_sum)
        loss = log_loss(y_predicted, y)
        # Gradients of the log loss with respect to each parameter
        w1d = (1/n) * np.dot(np.transpose(x1), (y_predicted - y))
        w2d = (1/n) * np.dot(np.transpose(x2), (y_predicted - y))
        bias_d = np.mean(y_predicted - y)
        w1 = w1 - rate * w1d
        w2 = w2 - rate * w2d
        bias = bias - rate * bias_d
        print(f'Epoch:{i}, w1:{w1}, w2:{w2}, bias:{bias}, loss:{loss}')
        # Stop early once the loss is low enough
        if loss <= loss_threshold:
            break
    return w1, w2, bias

# Usage (X_train_scaled and y_train come from a train/test split not shown here)
gradient_descent(
    X_train_scaled['age'],
    X_train_scaled['affordibility'],
    y_train,
    1000,
    0.4631
)

# custom neural network class
import numpy as np

class myNN:
    def __init__(self):
        self.w1 = 1
        self.w2 = 1
        self.bias = 0

    def sigmoid_numpy(self, X):
        return 1 / (1 + np.exp(-X))

    def log_loss(self, y_true, y_predicted):
        epsilon = 1e-15
        # Keep predictions strictly inside (0, 1) so log() stays finite
        y_predicted_new = [max(i, epsilon) for i in y_predicted]
        y_predicted_new = [min(i, 1 - epsilon) for i in y_predicted_new]
        y_predicted_new = np.array(y_predicted_new)
        return -np.mean(y_true * np.log(y_predicted_new) + (1 - y_true) * np.log(1 - y_predicted_new))

    def fit(self, X, y, epochs, loss_threshold):
        self.w1, self.w2, self.bias = self.gradient_descent(X['age'], X['affordibility'], y, epochs, loss_threshold)
        print(f"Final weights and bias: w1: {self.w1}, w2: {self.w2}, bias: {self.bias}")

    def predict(self, X_test):
        weighted_sum = self.w1 * X_test['age'] + self.w2 * X_test['affordibility'] + self.bias
        return self.sigmoid_numpy(weighted_sum)

    def gradient_descent(self, age, affordability, y_true, epochs, loss_threshold):
        w1 = w2 = 1
        bias = 0
        rate = 0.5
        n = len(age)
        for i in range(epochs):
            weighted_sum = w1 * age + w2 * affordability + bias
            y_predicted = self.sigmoid_numpy(weighted_sum)
            loss = self.log_loss(y_true, y_predicted)
            w1d = (1/n) * np.dot(np.transpose(age), (y_predicted - y_true))
            w2d = (1/n) * np.dot(np.transpose(affordability), (y_predicted - y_true))
            bias_d = np.mean(y_predicted - y_true)
            w1 = w1 - rate * w1d
            w2 = w2 - rate * w2d
            bias = bias - rate * bias_d
            # Log progress every 50 epochs
            if i % 50 == 0:
                print(f'Epoch:{i}, w1:{w1}, w2:{w2}, bias:{bias}, loss:{loss}')
            # Stop early once the loss is low enough
            if loss <= loss_threshold:
                print(f'Epoch:{i}, w1:{w1}, w2:{w2}, bias:{bias}, loss:{loss}')
                break
        return w1, w2, bias

# Usage
customModel = myNN()
customModel.fit(
    X_train_scaled,
    y_train,
    epochs=8000,
    loss_threshold=0.4631
)

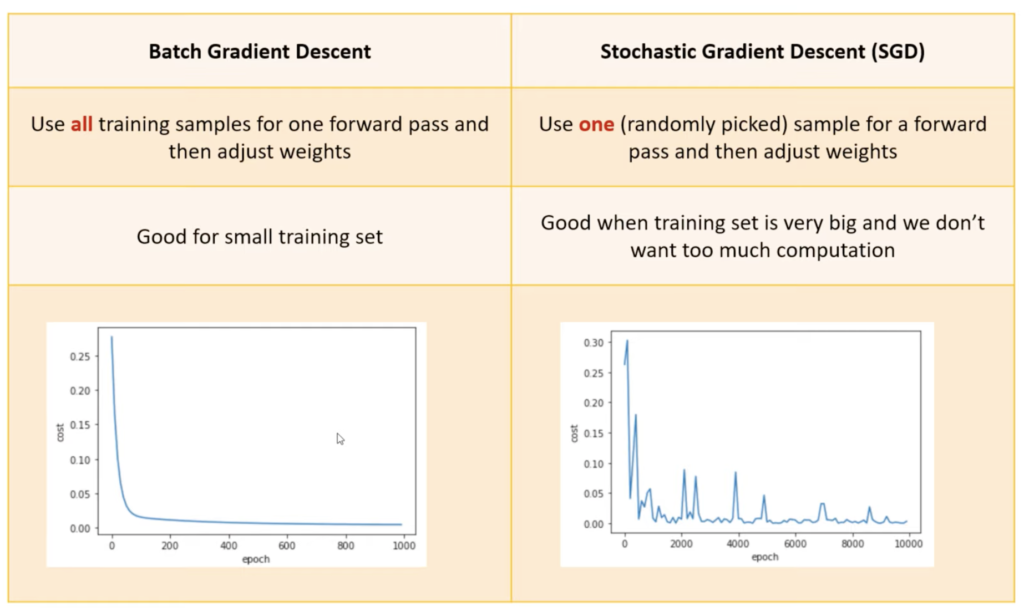

Batch Gradient Descent

def batch_gradient_descent(X, y_true, epochs, learning_rate = 0.01):
    number_of_features = X.shape[1]
    # 1-D numpy array with one weight per feature. In
    # our case number_of_features = 2 (area, bedroom)
    w = np.ones(shape=(number_of_features))
    b = 0
    total_samples = X.shape[0] # number of rows in X

    cost_list = []
    epoch_list = []

    for i in range(epochs):
        y_predicted = np.dot(w, X.T) + b

        w_grad = -(2/total_samples)*(X.T.dot(y_true-y_predicted))
        b_grad = -(2/total_samples)*np.sum(y_true-y_predicted)

        w = w - learning_rate * w_grad
        b = b - learning_rate * b_grad

        cost = np.mean(np.square(y_true-y_predicted)) # MSE (Mean Squared Error)

        if i%10==0:
            cost_list.append(cost)
            epoch_list.append(i)

    return w, b, cost, cost_list, epoch_list

w, b, cost, cost_list, epoch_list = batch_gradient_descent(
    scaled_X,
    scaled_y.reshape(scaled_y.shape[0],),
    500
)

w, b, cost
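`scaled_X` and `scaled_y` are not defined in these notes, so the call above cannot run on its own. The sketch below substitutes hypothetical synthetic data (`X_demo`, `y_demo`, `true_w` and `true_b` are all made up) and a condensed version of the same update rule, to show the weights converging to known values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for scaled_X / scaled_y: two features in [0, 1]
X_demo = rng.random((50, 2))
true_w, true_b = np.array([0.7, 0.3]), 0.1
y_demo = X_demo @ true_w + true_b  # noise-free linear target

def batch_gd(X, y, epochs, learning_rate=0.01):
    w = np.ones(X.shape[1])
    b = 0.0
    n = X.shape[0]
    for _ in range(epochs):
        error = y - (X @ w + b)
        # Same MSE gradients as above, computed on the full data set each step
        w = w - learning_rate * (-(2 / n) * (X.T @ error))
        b = b - learning_rate * (-(2 / n) * error.sum())
    cost = np.mean((y - (X @ w + b)) ** 2)
    return w, b, cost

w_fit, b_fit, final_cost = batch_gd(X_demo, y_demo, epochs=5000, learning_rate=0.1)
print(w_fit, b_fit, final_cost)
```

Because the target is an exact linear function of the features, the recovered weights and bias should end up very close to `true_w` and `true_b`.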

Mini Batch Gradient Descent

def mini_batch_gradient_descent(X, y_true, epochs = 100, batch_size = 5, learning_rate = 0.01):
    number_of_features = X.shape[1]
    # 1-D numpy array with one weight per feature. In
    # our case number_of_features = 3 (area, bedroom and age)
    w = np.ones(shape=(number_of_features))
    b = 0
    total_samples = X.shape[0] # number of rows in X

    if batch_size > total_samples: # In this case mini batch becomes same as batch gradient descent
        batch_size = total_samples

    cost_list = []
    epoch_list = []

    num_batches = int(total_samples/batch_size)

    for i in range(epochs):
        # Shuffle the rows once per epoch, then walk through them in batches
        random_indices = np.random.permutation(total_samples)
        X_tmp = X[random_indices]
        y_tmp = y_true[random_indices]

        for j in range(0,total_samples,batch_size):
            Xj = X_tmp[j:j+batch_size]
            yj = y_tmp[j:j+batch_size]
            y_predicted = np.dot(w, Xj.T) + b

            w_grad = -(2/len(Xj))*(Xj.T.dot(yj-y_predicted))
            b_grad = -(2/len(Xj))*np.sum(yj-y_predicted)

            w = w - learning_rate * w_grad
            b = b - learning_rate * b_grad

            cost = np.mean(np.square(yj-y_predicted)) # MSE (Mean Squared Error)

        if i%10==0:
            cost_list.append(cost)
            epoch_list.append(i)

    return w, b, cost, cost_list, epoch_list

w, b, cost, cost_list, epoch_list = mini_batch_gradient_descent(
    scaled_X,
    scaled_y.reshape(scaled_y.shape[0],),
    epochs = 120,
    batch_size = 5
)

w, b, cost

Stochastic Gradient Descent

import random

def stochastic_gradient_descent(X, y_true, epochs, learning_rate = 0.01):
    number_of_features = X.shape[1]
    # 1-D numpy array with one weight per feature. In
    # our case number_of_features = 3 (area, bedroom and age)
    w = np.ones(shape=(number_of_features))
    b = 0
    total_samples = X.shape[0]

    cost_list = []
    epoch_list = []

    for i in range(epochs):
        random_index = random.randint(0,total_samples-1) # random index from total samples
        sample_x = X[random_index]
        sample_y = y_true[random_index]

        y_predicted = np.dot(w, sample_x.T) + b

        # Only one sample is used per step; dividing by total_samples
        # just rescales the effective learning rate
        w_grad = -(2/total_samples)*(sample_x.T.dot(sample_y-y_predicted))
        b_grad = -(2/total_samples)*(sample_y-y_predicted)

        w = w - learning_rate * w_grad
        b = b - learning_rate * b_grad

        cost = np.square(sample_y-y_predicted)

        if i%100==0: # at every 100th iteration record the cost and epoch value
            cost_list.append(cost)
            epoch_list.append(i)

    return w, b, cost, cost_list, epoch_list

w_sgd, b_sgd, cost_sgd, cost_list_sgd, epoch_list_sgd = stochastic_gradient_descent(
    scaled_X,
    scaled_y.reshape(scaled_y.shape[0],),
    10000
)

w_sgd, b_sgd, cost_sgd

JSON Data in SQL Server

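The right way to declare a JSON column

- Store JSON in an NVARCHAR column; these notes use a minimum of NVARCHAR(4000). An ISJSON check constraint makes SQL Server reject text that is not valid JSON.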

CREATE TABLE BLOG
(
    blogID INT IDENTITY(1,1),
    blogTitle NVARCHAR(100),
    blogText NVARCHAR(MAX),
    blogDetails NVARCHAR(4000),
    CONSTRAINT pk_blog_blogID PRIMARY KEY(blogID),
    CONSTRAINT chk_blog_blogDetails CHECK(ISJSON(blogDetails) = 1)
)

JSON_VALUE(<field>,<path>)

JSON_QUERY(<field>,<path>)

JSON_MODIFY(<field>,<path>,<new value>)

SELECT * FROM OPENROWSET(BULK N'C:\data\data.json', SINGLE_CLOB) AS jData
-- returns a table with a 'BulkColumn' field

OPENJSON & CROSS APPLY

SELECT * FROM OPENROWSET(BULK N'C:\data\data.json', SINGLE_CLOB) AS jData
CROSS APPLY OPENJSON(BulkColumn)

Ultimate Calendar Table by Avi Singh

let

/*

****This Calendar was created and provided by Avi Singh****

****This can be freely shared as long as this text comment is retained.****

http://www.youtube.com/PowerBIPro

www.LearnPowerBI.com by Avi Singh

*/

#"LearnPowerBI.com by Avi Singh" = 1,

StartDate = #date(2014, 1, 1),

EndDate = Date.EndOfYear(DateTime.Date(DateTime.FixedLocalNow())) /*was "#date(2017, 1, 1)" Updated on 201802027: hard Coded End of Year caused some formulas to break, switching to dynamic date*/,

//Used for 'Offset' Column calculations, you may Hard code CurrentDate for testing e.g. #date(2017,9,1)

CurrentDate = DateTime.Date(DateTime.FixedLocalNow()),

// Specify the last month in your Fiscal Year, e.g. if June is the last month of your Fiscal Year, specify 6

FiscalYearEndMonth = 6,

#"==SET PARAMETERS ABOVE==" = 1,

#"==Build Date Column==" = #"==SET PARAMETERS ABOVE==",

ListDates = List.Dates(StartDate, Number.From(EndDate - StartDate)+1, #duration(1,0,0,0)),

#"Converted to Table" = Table.FromList(ListDates, Splitter.SplitByNothing(), null, null, ExtraValues.Error),

#"Renamed Columns as Date" = Table.RenameColumns(#"Converted to Table",{{"Column1", "Date"}}),

// As far as Power BI is concerned, the 'Date' column is all that is needed :-) But we will continue and add a few Human-Friendly Columns

#"Changed Type to Date" = Table.TransformColumnTypes(#"Renamed Columns as Date",{{"Date", type date}}),

#"==Add Calendar Columns==" = #"Changed Type to Date",

#"Added Calendar MonthNum" = Table.AddColumn(#"==Add Calendar Columns==", "MonthNum", each Date.Month([Date]), Int64.Type),

#"Added Month Name" = Table.AddColumn(#"Added Calendar MonthNum", "Month", each Text.Start(Date.MonthName([Date]),3), type text),

#"Added Month Name Long" = Table.AddColumn(#"Added Month Name", "MonthLong", each Date.MonthName([Date]), type text),

#"Added Calendar Quarter" = Table.AddColumn(#"Added Month Name Long", "Quarter", each "Q" & Text.From(Date.QuarterOfYear([Date]))),

#"Added Calendar Year" = Table.AddColumn(#"Added Calendar Quarter", "Year", each Date.Year([Date]), Int64.Type),

#"==Add Fiscal Calendar Columns==" = #"Added Calendar Year",

#"Added FiscalMonthNum" = Table.AddColumn(#"==Add Fiscal Calendar Columns==", "FiscalMonthNum", each if [MonthNum] > FiscalYearEndMonth

then [MonthNum] - FiscalYearEndMonth

else [MonthNum] + (12 - FiscalYearEndMonth), type number),

#"Added FiscalMonth Name" = Table.AddColumn(#"Added FiscalMonthNum", "FiscalMonth", each [Month]),

#"Added FiscalMonth Name Long" = Table.AddColumn(#"Added FiscalMonth Name", "FiscalMonthLong", each [MonthLong]),

#"Added FiscalQuarter" = Table.AddColumn(#"Added FiscalMonth Name Long", "FiscalQuarter", each "FQ" & Text.From(Number.RoundUp([FiscalMonthNum] / 3,0))),

#"Added FiscalYear" = Table.AddColumn(#"Added FiscalQuarter", "FiscalYear", each "FY" &

Text.End(

Text.From(

if [MonthNum] > FiscalYearEndMonth

then [Year] + 1

else [Year]

)

, 2

)),

#"==Add Calendar Date Offset Columns==" = #"Added FiscalYear",

// Can be used to for example to show the past 3 months(CurMonthOffset = 0, -1, -2)

#"Added CurMonthOffset" = Table.AddColumn(#"==Add Calendar Date Offset Columns==", "CurMonthOffset", each ( Date.Year([Date]) - Date.Year(CurrentDate) ) * 12

+ Date.Month([Date]) - Date.Month(CurrentDate), Int64.Type),

// Can be used to for example to show the past 3 quarters (CurQuarterOffset = 0, -1, -2)

#"Added CurQuarterOffset" = Table.AddColumn(#"Added CurMonthOffset", "CurQuarterOffset", each /*Year Difference*/

( Date.Year([Date]) - Date.Year(CurrentDate) )*4

/*Quarter Difference*/

+ Number.RoundUp(Date.Month([Date]) / 3)

- Number.RoundUp(Date.Month(CurrentDate) / 3),

Int64.Type),

// Can be used to for example to show the past 3 years (CurYearOffset = 0, -1, -2)

#"Added CurYearOffset" = Table.AddColumn(#"Added CurQuarterOffset", "CurYearOffset", each Date.Year([Date]) - Date.Year(CurrentDate), Int64.Type),

// Can be used to for example filter out all future dates

#"Added FutureDate Flag" = Table.AddColumn(#"Added CurYearOffset", "FutureDate", each if [Date] > CurrentDate then "Future" else "Past" ),

// FiscalYearOffset is the only Offset that is different.

// FiscalQuarterOffset = is same as CurQuarterOffset

// FiscalMonthOffset = is same as CurMonthOffset

#"==Add FiscalYearOffset==" = #"Added FutureDate Flag",

#"Filtered Rows to CurrentDate" = Table.SelectRows(#"==Add FiscalYearOffset==", each ([Date] = CurrentDate)),

CurrentFiscalYear = #"Filtered Rows to CurrentDate"{0}[FiscalYear],

#"Continue...Orig Table" = #"==Add FiscalYearOffset==",

#"Added CurFiscalYearOffset" = Table.AddColumn(#"Continue...Orig Table", "CurFiscalYearOffset", each Number.From(Text.Range([FiscalYear],2,2)) -

Number.From(Text.Range(CurrentFiscalYear,2,2))

/*Extract the numerical portion, e.g. FY18 = 18*/),

#"==Add General Columns==" = #"Added CurFiscalYearOffset",

// Used as 'Sort by Column' for MonthYear columns

#"Added MonthYearNum" = Table.AddColumn(#"==Add General Columns==", "MonthYearNum", each [Year]*100 + [MonthNum] /*e.g. Sep-2016 would become 201609*/, Int64.Type),

#"Added MonthYear" = Table.AddColumn(#"Added MonthYearNum", "MonthYear", each [Month] & "-" & Text.End(Text.From([Year]),2)),

#"Added MonthYearLong" = Table.AddColumn(#"Added MonthYear", "MonthYearLong", each [Month] & "-" & Text.From([Year])),

#"Added WeekdayNum" = Table.AddColumn(#"Added MonthYearLong", "WeekdayNum", each Date.DayOfWeek([Date]), Int64.Type),

#"Added Weekday Name" = Table.AddColumn(#"Added WeekdayNum", "Weekday", each Text.Start(Date.DayOfWeekName([Date]),3), type text),

#"Added WeekdayWeekend" = Table.AddColumn(#"Added Weekday Name", "WeekdayWeekend", each if [WeekdayNum] = 0 or [WeekdayNum] = 6

then "Weekend"

else "Weekday"),

#"==Improve Ultimate Table" = #"Added WeekdayWeekend",

#"----Add WeekSequenceNum----" = #"==Improve Ultimate Table",

#"Filtered Rows Sundays Only (Start of Week)" = Table.SelectRows(#"----Add WeekSequenceNum----", each ([WeekdayNum] = 0)),

#"Added Index WeekSequenceNum" = Table.AddIndexColumn(#"Filtered Rows Sundays Only (Start of Week)", "WeekSequenceNum", 2, 1),

#"Merged Queries Ultimate Table to WeekSequenceNum" = Table.NestedJoin(#"==Improve Ultimate Table",{"Date"},#"Added Index WeekSequenceNum",{"Date"},"Added Index WeekNum",JoinKind.LeftOuter),

#"Expanded Added Index WeekNum" = Table.ExpandTableColumn(#"Merged Queries Ultimate Table to WeekSequenceNum", "Added Index WeekNum", {"WeekSequenceNum"}, {"WeekSequenceNum"}),

// somehow it ends up being unsorted after Expand Column, should not matter for the end table, but makes it harder to debug and check everything is correct. Thus sorting it.

#"ReSorted Rows by Date" = Table.Sort(#"Expanded Added Index WeekNum",{{"Date", Order.Ascending}}),

#"Filled Down WeekSequenceNum" = Table.FillDown(#"ReSorted Rows by Date",{"WeekSequenceNum"}),

#"Replaced Value WeekSequenceNum null with 1" = Table.ReplaceValue(#"Filled Down WeekSequenceNum",null,1,Replacer.ReplaceValue,{"WeekSequenceNum"}),

#"Inserted Start of Week (WeekDate)" = Table.AddColumn(#"Replaced Value WeekSequenceNum null with 1", "WeekDate", each Date.StartOfWeek([Date]), type date),

// Added 2019-Oct

#"Inserted Week of Year" = Table.AddColumn(#"Inserted Start of Week (WeekDate)", "Week of Year", each Date.WeekOfYear([Date]), Int64.Type),

#"----WeekSequenceNum Complete----" = #"Inserted Week of Year",

Current_WeekSequenceNum = #"----WeekSequenceNum Complete----"{[Date = CurrentDate]}?[WeekSequenceNum],

#"Added Custom CurWeekOffset" = Table.AddColumn(#"----WeekSequenceNum Complete----", "CurWeekOffset", each [WeekSequenceNum] - Current_WeekSequenceNum, Int64.Type),

// Adding a DayofYear 1 to 365

// And YTD, QTD, MTD Columns (can help with showing YTD Numbers across multiple years)

#"==Updates 2019-Feb DayofYear and YTD QTD MTD Columns" = #"Added Custom CurWeekOffset",

// This maybe useful in some DAX Calculations

#"Inserted Day of Year" = Table.AddColumn(#"==Updates 2019-Feb DayofYear and YTD QTD MTD Columns", "Day of Year", each Date.DayOfYear([Date]), Int64.Type),

#"Added Flag_YTD" = Table.AddColumn(#"Inserted Day of Year", "Flag_YTD", each if Date.DayOfYear([Date]) <= Date.DayOfYear(CurrentDate)

then "YTD"

else null),

#"Added Flag_MTD" = Table.AddColumn(#"Added Flag_YTD", "Flag_MTD", each if Date.Day([Date]) <= Date.Day(CurrentDate)

then "MTD"

else null),

#"Added Flag_QTD" = Table.AddColumn(#"Added Flag_MTD", "Flag_QTD", each //Compare Month Number in Quarter (1,2,3) for [Date] and CurrentDate

if Number.Mod(Date.Month([Date])-1, 3) + 1

<= Number.Mod(Date.Month(CurrentDate)-1, 3) + 1

then "QTD"

else null),

#"==Update 2019-Mar CurrentDatOffset" = #"Added Flag_QTD",

#"Added CurrentDayOffset" = Table.AddColumn(#"==Update 2019-Mar CurrentDatOffset", "CurrentDayOffset", each [Date] - CurrentDate),

#"Changed Type1" = Table.TransformColumnTypes(#"Added CurrentDayOffset",{{"CurrentDayOffset", Int64.Type}})

in

#"Changed Type1"
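The fiscal columns in the query above follow a small piece of arithmetic that is easy to check outside Power Query. The sketch below mirrors the FiscalMonthNum, FiscalQuarter and FiscalYear steps in Python; the function name `fiscal_fields` is illustrative, and this is a reading of the query's logic rather than part of Avi Singh's calendar.

```python
import math
from datetime import date

FISCAL_YEAR_END_MONTH = 6  # same role as FiscalYearEndMonth in the query

def fiscal_fields(d, fy_end_month=FISCAL_YEAR_END_MONTH):
    """Return (FiscalMonthNum, FiscalQuarter, FiscalYear) for a date."""
    if d.month > fy_end_month:
        # Months after the fiscal year end start the next fiscal year
        fiscal_month = d.month - fy_end_month
        fiscal_year = d.year + 1
    else:
        fiscal_month = d.month + (12 - fy_end_month)
        fiscal_year = d.year
    fiscal_quarter = "FQ" + str(math.ceil(fiscal_month / 3))
    return fiscal_month, fiscal_quarter, "FY" + str(fiscal_year)[-2:]

print(fiscal_fields(date(2024, 7, 15)))  # first month of fiscal year 2025
print(fiscal_fields(date(2024, 6, 30)))  # last month of fiscal year 2024
```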

Generate Domain CA validated SSL for PowerSchool Test Server

Scenario

I want to add a proper SSL certificate, generated with OpenSSL, to my PowerSchool test server, which runs inside my local (AD-joined) domain.

Assumption

- domain name : school.local

- powerschool test server name : abc001 (abc001.school.local)

- internal Microsoft server acting as the local CA : ca.school.local

Export Internal Root CA with Private Key from Microsoft Certificate

In order to validate the certificate for the PowerSchool test server within the local domain, we need to get the rootCA certificate and key

- Log on to the Domain Controller / certificate server that has the target Certificate Authority installed.

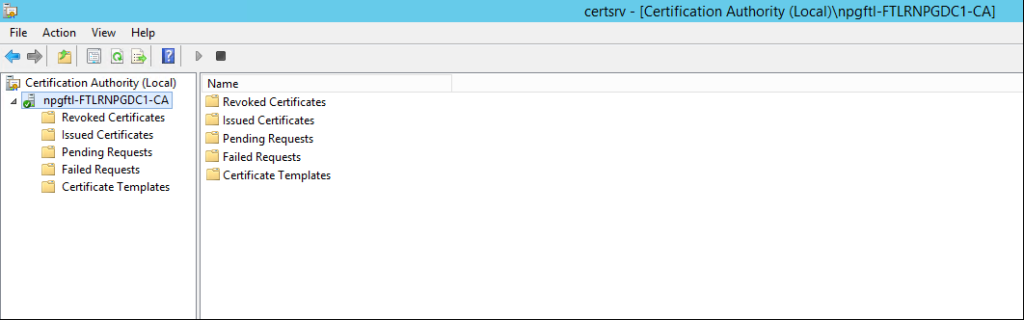

- Open the Certificate Authority MMC (run certsrv.msc).

- Right-click the CA name in the tree (“npgftl-FTLRNPGDC1-CA” in the example), and select All Tasks > Back up CA.

- On the Certification Authority Backup Wizard screen click Next

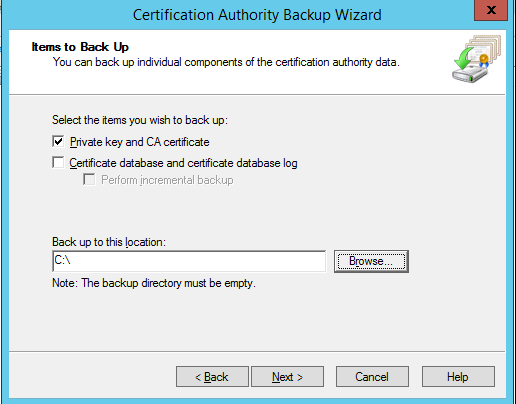

- On the Items to Back Up page, select Private key and CA certificate, enter a location in which to save the file, and click Next.

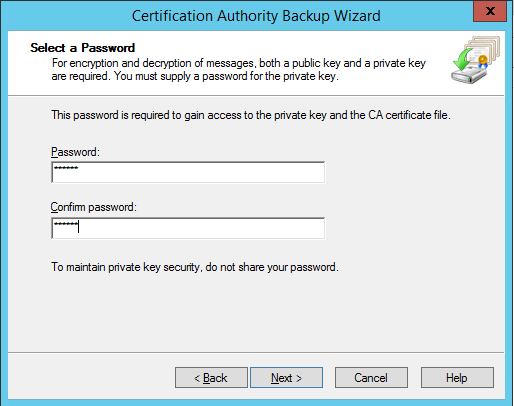

- On the Select a Password page, enter a password and confirm it. This password will be required when processing and importing the key into another server.

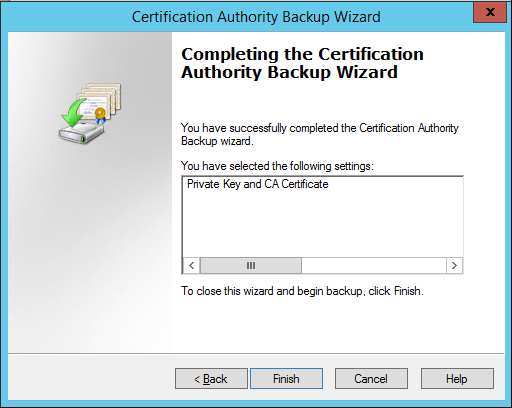

- Click Next and then Finish. When the process is complete, you will have a .p12 file (for example, CA_name.p12) in the folder you specified. This file contains both the CA certificate (with its public key) and the private key.

- Copy the rootCA.p12 file from the backup location and extract the private key and the certificate from the PKCS#12 container (this generates the .key and .crt equivalents as PEM files):

# Private key

openssl pkcs12 -in rootCA.p12 -nocerts -out rootCA-key.pem

# Certificates

openssl pkcs12 -in rootCA.p12 -clcerts -nokeys -out rootCA-cert.pem

Generate configuration file ‘abc001.school.local.csr.cnf’

Using a text editor such as Notepad, create a configuration file for the CSR (Certificate Signing Request) similar to the one below:

[req]

default_bits = 2048

prompt = no

default_md = sha256

distinguished_name = dn

[dn]

C=BH

ST=Riffa

L=Riffa

O=ABC School Bahrain

OU=PowerSchool

emailAddress=admin@abc.com

CN=abc001.school.local

Create a v3.ext file with the list of local SAN (Subject Alternative Name) entries

authorityKeyIdentifier=keyid,issuer

basicConstraints=CA:FALSE

keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment

subjectAltName = @alt_names

[alt_names]

DNS.1=abc001.school.local

IP.1=172.168.10.160

Create a private key and certificate-signing request (CSR) for the abc001.school.local certificate

openssl req -new -sha256 -nodes -out abc001.school.local.csr \
  -newkey rsa:2048 -keyout abc001.school.local.key \
  -config abc001.school.local.csr.cnf

Issue a certificate via the root SSL certificate and the CSR created earlier

openssl x509 -req -in abc001.school.local.csr -CA rootCA-cert.pem \
  -CAkey rootCA-key.pem -CAcreateserial -out abc001.school.local.crt \
  -days 500 -sha256 -extfile v3.ext

Convert generated PKCS8 Format Key to Traditional RSA key format

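PowerSchool accepts only keys in the traditional RSA format.

openssl rsa -in abc001.school.local.key -text \
  -out abc001.school.local_rsa.key

# On OpenSSL 3.x, openssl rsa writes PKCS8 by default; add -traditional to get the traditional RSA format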

# Traditional RSA key format

-----BEGIN RSA PRIVATE KEY-----

[...]

-----END RSA PRIVATE KEY-----

# PKCS8 Format

-----BEGIN PRIVATE KEY-----

[...]

-----END PRIVATE KEY-----
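Since the two formats differ in their first line, a key file can be checked before it is handed to PowerSchool. A minimal sketch; the function name `pem_key_format` is illustrative, and it only inspects the PEM header without parsing or validating the key itself.

```python
def pem_key_format(pem_text):
    """Classify a PEM private key by its first BEGIN line."""
    headers = {
        "-----BEGIN RSA PRIVATE KEY-----": "traditional RSA (PKCS#1)",
        "-----BEGIN PRIVATE KEY-----": "PKCS#8 (unencrypted)",
        "-----BEGIN ENCRYPTED PRIVATE KEY-----": "PKCS#8 (encrypted)",
    }
    for line in pem_text.splitlines():
        line = line.strip()
        if line.startswith("-----BEGIN"):
            return headers.get(line, "unknown")
    return "no PEM header found"

sample = "-----BEGIN PRIVATE KEY-----\n[...]\n-----END PRIVATE KEY-----\n"
print(pem_key_format(sample))
```

To check a real file, read it as text first, for example `pem_key_format(open("abc001.school.local_rsa.key").read())`.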

Resources

- [How to Create Trusted Self-Signed SSL Certificates and Local Domains for Testing](https://medium.com/better-programming/trusted-self-signed-certificate-and-local-domains-for-testing-7c6e6e3f9548)

- [How to Export Internal Root CA with Private Key from Microsoft Certificate](https://support.citrix.com/article/CTX224970)

- [Create Your Own Self Signed X509 Certificate](https://www.youtube.com/watch?v=1xtBkukWiek)

- [Create & sign SSL/TLS certificates with openssl](https://www.youtube.com/watch?v=7YgaZIFn7mY)

# Additional Notes

# Merge key (PEM) and certificate (PEM) into a single file (PKCS#12)

openssl pkcs12 -export \
  -in my-cert.pem -inkey my-key.pem \
  -out my-pfx-cer.pfx

Hidden Admin User On Mac

dscl . list /Users | grep -v '^_'

dscacheutil -q user | grep -A 3 -B 2 -e 'name: aRoot'

echo -n 'Password: '; read -s password

echo; echo; echo "entered password is '$password'"; echo; echo

sudo dscl . -create /Users/aRoot IsHidden 1

sudo dscl . -create /Users/aRoot UserShell /bin/bash

sudo dscl . -create /Users/aRoot NFSHomeDirectory /Local/Users/aRoot

sudo dscl . -create /Users/aRoot RealName 'ASB Admin'

sudo dscl . -create /Users/aRoot UniqueID 401

sudo dscl . -create /Users/aRoot PrimaryGroupID 400

sudo dscl . -passwd /Users/aRoot "$password"

sudo dscl . -append /groups/admin GroupMembership aRoot

dscl . -read /groups/admin GroupMembership

echo -n 'HostName: '

read hostname

sudo scutil --set HostName "$hostname"

sudo scutil --set LocalHostName "$hostname"

sudo scutil --set ComputerName "$hostname"

dscacheutil -flushcache

Some Additional Steps Before Removing MDM

# Get detailed user info for the aRoot user

dscl . -read /Users/aRoot

# List all admin users

dscl . -read /Groups/admin | grep GroupMembership

# Make the user an administrator

sudo dscl . -merge /Groups/admin GroupMembership aRoot

# List all users with a UID above 500

dscl . list /Users UniqueID | awk '$2 > 500 { print $1 }'

# Change the password

echo -n 'Password: '; read -s password

echo; echo; echo "entered password is '$password'"; echo; echo

sudo dscl . -passwd /Users/aRoot "$password"